Short read: Just click and start

For the brave reader: just click and try the Voice Model Expression Language online.

Long read: Building better JSONs for language models

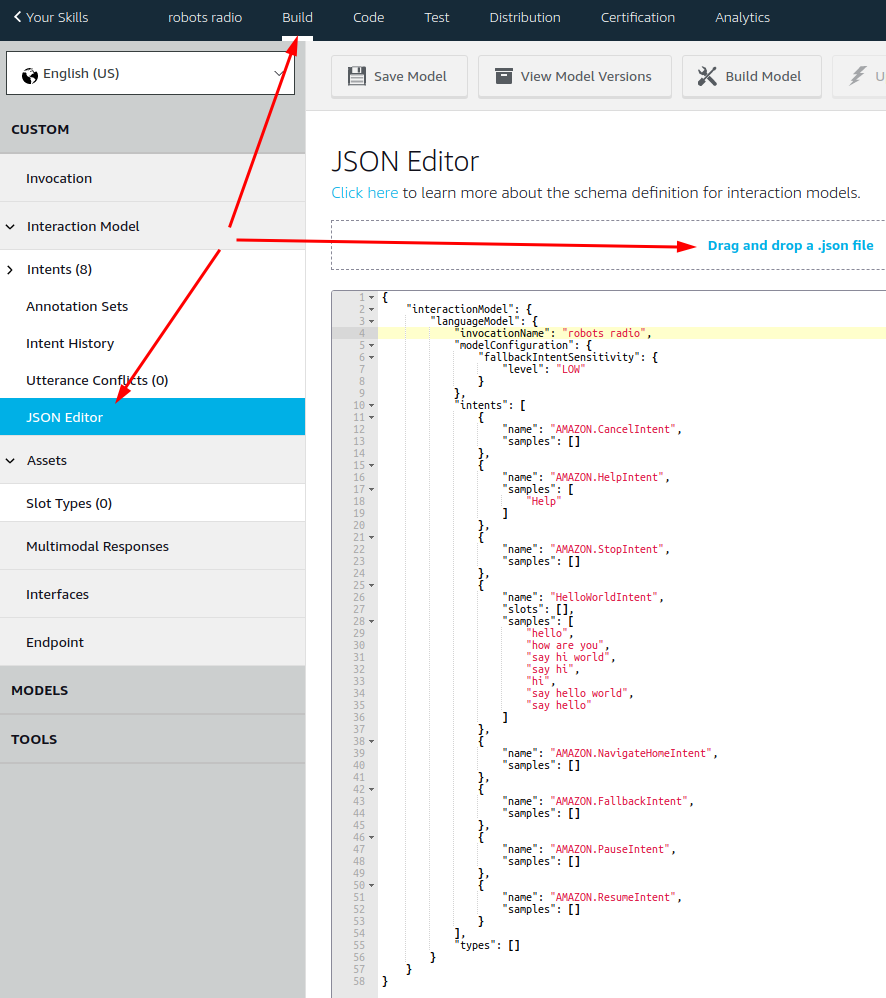

Amazon offers two ways to maintain language (voice) models. The web UI is fine for getting something up and running, but after a while you may find yourself working with the native JSON file, which you can open from the Build tab in the Alexa Developer Console.

Luckily you do not need to decide on one or the other. You can switch between the two approaches at any time and choose whichever fits the next task you have in mind.

The core idea is as follows:

Looking at an everyday use case

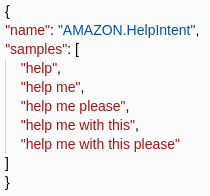

Now here is a question: How often did you write something like this:

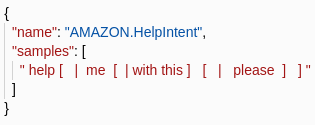

Imagine you could write something like this instead:

Five lines collapse into a one-liner when we use an expression language derived from a syntax some people call „Spintax“ (short for „Spin Syntax“).

Easy Syntax for compact expressions

The syntax suggested here is straightforward:

Alternatives are separated by the symbol ‘|’, and the list of alternatives is delimited by the square brackets „[“ and „]“. An example expression might look like this:

" [ nice | beautiful] "This means either „nice“ or „beautiful“.

One option may be an empty string which is a way to mark variants as optional:

" [ | please] "This means either nothing (= empty string) or „please“.

Expressions may be nested to build up powerful expressions as shown in the introductory example:

" help [ | me [ | please] ] "This means either „help“ or „help me“ or „help me please“.

Looking at a bigger example

The example shown above was only a short one: now guess what you get with the following expression:

"[ | [| would you ] please] [help|support|assist] [ | me [ | with this [| [|crazy|strange] [situation|problem] ] ]]"

I did the work for you: this would result in 81 different sample utterances.

Now imagine you did this manually and it turns out that in addition to „would you“ you also feel like you have to add „could you“ to the list of sample utterances. That would be quite some manual work to do. And watch out that you don’t miss a variation in your list or create duplicates by mistake.

In a rule-based environment you could just add another variant to the rule:

"[ | [ | would you | could you ] please] [help|support|assist] [ | me [ | with this [| [|crazy|strange] [situation|problem] ] ]]"

Now we have a definition for 108 sample utterances. You don’t believe me?

Be patient: soon you can try it yourself.
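If you want to check the numbers before trying the tool, the count can be derived without listing every utterance: alternatives inside a bracket add up, and parts that follow each other multiply. For the first expression this gives 3 × 3 × 9 = 81, for the second 4 × 3 × 9 = 108. The Python sketch below applies exactly this rule; `count_variants` is a hypothetical helper name, and it counts derivations, so it would over-count if two alternatives produced the same text.

```python
def count_variants(expression: str) -> int:
    """Count the variants of a bracket expression without expanding it."""
    pos = 0  # current read position inside the expression

    def sequence() -> int:
        # Consecutive groups multiply; literal text does not change the count.
        nonlocal pos
        total = 1
        while pos < len(expression) and expression[pos] not in "|]":
            if expression[pos] == "[":
                total *= group()
            else:
                pos += 1
        return total

    def group() -> int:
        # Alternatives inside one pair of brackets add up.
        nonlocal pos
        pos += 1  # skip '['
        total = sequence()
        while pos < len(expression) and expression[pos] == "|":
            pos += 1  # skip '|'
            total += sequence()
        if pos >= len(expression) or expression[pos] != "]":
            raise ValueError("missing closing ']'")
        pos += 1  # skip ']'
        return total

    result = sequence()
    if pos != len(expression):
        raise ValueError(f"unexpected {expression[pos]!r} at position {pos}")
    return result


BASE = ("[ | [| would you ] please] [help|support|assist] "
        "[ | me [ | with this [| [|crazy|strange] [situation|problem] ] ]]")
EXTENDED = BASE.replace("[| would you ]", "[ | would you | could you ]")

print(count_variants(BASE))      # 81
print(count_variants(EXTENDED))  # 108
```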

What did we see so far

So far we have seen (at least) two possible advantages of this approach:

A compact notation helps you keep an overview of all the rules you have so far. Up to a point, these expressions are easier to handle than a long list of sample utterances.

Adding or removing variations is much easier when the utterance lists are generated from expressions than when you maintain them manually.

Model designers will disagree on where to draw the line, but that is not the point here. You can decide what works best for you.

How to make use of this approach

You’ll never know if an approach works out in the real world if you don’t give it a try. This is what I plan to do here:

As users of the Alexa Developer Console we cannot modify its user interface, but since we have access to the complete JSON describing the voice model, we can at least work with that code.

I have been using this technique myself for quite a while now and found out that it really saves me time which I can then invest to focus on more interesting things.

As this might be interesting for other developers too I built a public user interface so that everybody can play around with this idea. You may have a look at the first version at interactionmodel.applicate.de.

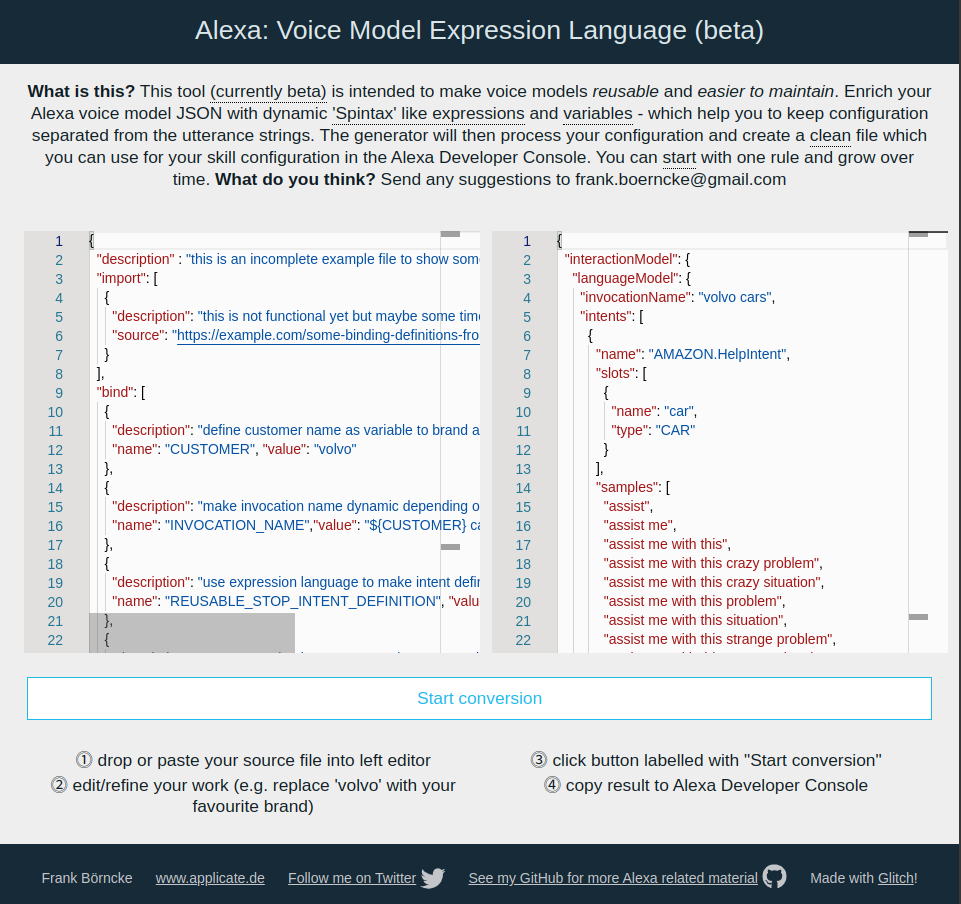

This is what you can expect to see when starting the service:

You see a page with two editors next to each other: you may paste your JSON into the left editor (or drag and drop a file containing the JSON) and you will find a processed result within the right editor window. If the conversion does not start automatically you can start it using the button marked with „Start conversion“ below the editors.

The basic idea is that the content of the right window matches the format expected by the Alexa Developer Console, so you can paste it back directly (this does not hold if you decide to work on single intent definitions or fragments, but we will get back to this later).

First step

As a starter you may want to copy a complete JSON from the Alexa Developer Console into the left editor and then start the conversion.

This gives you the following:

- The JSON document will be reformatted

- Sample utterances will be trimmed

- Duplicate inner whitespace within sample utterances will be eliminated

- Sample utterances will be sorted in ascending order

- The conversion will make sure there are no duplicates within the sample utterance lists
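The clean-up steps listed above can be sketched in a few lines of Python. This is only an illustration under assumptions, not the tool's code: the helper names are made up, every list stored under a key called "samples" is treated as a list of sample utterances, and "ascending order" is taken to mean Python's default string ordering.

```python
import json


def normalize_samples(samples):
    """Trim, collapse inner whitespace, drop duplicates and sort ascending."""
    return sorted({" ".join(sample.split()) for sample in samples})


def clean_model(node):
    """Walk any JSON structure and normalize every list stored under "samples"."""
    if isinstance(node, dict):
        return {
            key: normalize_samples(value)
            if key == "samples" and isinstance(value, list)
            else clean_model(value)
            for key, value in node.items()
        }
    if isinstance(node, list):
        return [clean_model(item) for item in node]
    return node


raw = '{"name": "AMAZON.HelpIntent", "samples": ["  help   me ", "help", "help me", "help"]}'
cleaned = clean_model(json.loads(raw))

# json.dumps with indent takes care of the reformatting step.
print(json.dumps(cleaned, indent=2))
```

Because the walk is recursive, the same function works on a complete model and on a single intent fragment.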

So far the page serves as both a voice model pretty printer and a clean-up tool. It may also help you with refactoring your voice model.

This alone makes it worth a try. But there is more to it.

Adding the first rule

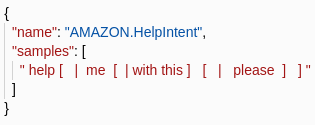

Now you may want to add your first rule to your JSON. I recommend starting with something simple, such as the example above, which I show here again:

Add the one line expression from the example to your AMAZON.HelpIntent, press the button to start the conversion and check the result in the right editor window.

If this works for you then try adding some other rules. You will get used to it and see what makes sense for you.

Try adding an error

Now try adding a syntax error to your JSON and start the conversion. The tool does its best to detect errors and notify you about what went wrong, but don’t expect too much. The editor itself may show a red mark where something is wrong.

Before you proceed, remove the error.

Try editing single intent definitions only

This one might not seem obvious at first: it is not necessary to work with complete voice model files. If you try, you will see that the tool also works with parts of the voice model JSON as long as these parts are syntactically correct JSON constructs.

This means you can use the tool to incrementally build up a single new intent using the expression language. As a second step you could then integrate your result into the main JSON.

Homework (level easy): build up an AMAZON.HelpIntent from scratch using a dynamic expression as a standalone input in the left editor. Start the conversion.

Hint: You will find an example solution at the beginning of this text.

Homework (level advanced): design sample utterances for some AskForHotDrinkIntent.

Diving deeper

Up to now we have used the tool to clean up voice model JSON files and make them easier to handle.

But you could do more: if you are brave enough to change your workflow there are additional features waiting for you.

When you load Voice Model Designer for the first time, the tool starts with a sample document showing additional concepts which may make your life easier. Of course, you have to decide for yourself whether they are useful for you.

This is what the (optional) header of the file looks like:

"bind": [

{

"description": "define customer name as variable to brand an otherwise identical skill",

"name": "CUSTOMER", "value": "volvo"

},

{

"description": "make invocation name dynamic depending on customer name",

"name": "INVOCATION_NAME","value": "${CUSTOMER} cars"

},

{

"description": "use expression language to make intent definitions reusable",

"name": "REUSABLE_STOP_INTENT_DEFINITION", "value": "[stop|exit|shut up|finish|terminate]"

},

{

"description": "use expression language to make synonym list reusable",

"name": "REUSABLE_CAR_SYNONYM_LIST", "value": "[old| [|brand] new|second hand| used ] [|electric] [[|motor] car| [|motor] vehicle|auto|automobile|transporter|truck|wreck]"

}

]

We will now explain what all this means and what it might be good for.

Adding Comments to your voice model

What I really miss in JSON is a built-in language construct for adding comments to a file. As I believe that comments are a useful concept even within JSON files, I added two variants:

Within JSON objects you may add comments using the element „description“ as follows:

"description": "this is a comment"When running the conversion these parts of the JSON will be removed completely to not disturb the process within the Alexa Developer Console.

Within arrays in the JSON file you may add elements starting with „//“.

[ "help", "// this is a comment and the array element will be deleted on conversion", "help me" ]Again when running the conversion these parts of the JSON will be removed completely to not disturb the process within the Alexa Developer Console. This second option feels a bit „hacky“ to me but it is up to you if you make use of it or not.

Define and use bindings

When working with APL we all learned that binding variables is a useful concept. This is why I added a feature to define bindings within the header of the file, as shown in the header example above. In short, a binding might look as follows:

"bind": [

{

"name": "CUSTOMER",

"value": "volvo"

}

]

What happens here is that the variable CUSTOMER is given the value „volvo“.

In the rest of the JSON file, wherever there is a string, we may use the notation „${CUSTOMER}“ as often as we like to refer to the value defined in the header.

This will not only work within sample utterances but also for invocation name definitions:

"invocationName": "${CUSTOMER} cars"

This might make sense if you plan to brand the same skill for different customers. So you would copy your main template, set the variables and run the generator for a new version of the skill.

You could also define expression rules in a variable. This might have its use cases if you plan to make definitions reusable by extracting them to a header:

"bind": [

{

"description": "use expression language to make intent definitions reusable",

"name": "REUSABLE_STOP_INTENT_DEFINITION",

"value": "[stop|exit|shut up|finish|terminate]"

}

]

In case somebody asks: yes, you can reuse a binding within the next definition:

"bind": [

{

"description": "define customer name as variable to brand an otherwise identical skill",

"name": "CUSTOMER",

"value": "volvo"

},

{

"description": "make invocation name dynamic depending on customer name",

"name": "INVOCATION_NAME",

"value": "${CUSTOMER} cars"

}

]
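Resolving bindings in the order in which they are listed is enough to make this kind of reuse work. Here is a small Python sketch of that idea; the function names, the regular expression for „${NAME}“ and the choice to leave unknown names untouched are all assumptions for illustration and may differ from what the tool does.

```python
import re

# Matches ${NAME}, where NAME consists of letters, digits and underscores.
PLACEHOLDER = re.compile(r"\$\{(\w+)\}")


def substitute(text, values):
    """Replace every ${NAME} in text; unknown names are left untouched (assumption)."""
    return PLACEHOLDER.sub(lambda m: values.get(m.group(1), m.group(0)), text)


def resolve_bindings(bind):
    """Resolve the "bind" list top to bottom so later entries can use earlier ones."""
    values = {}
    for entry in bind:
        values[entry["name"]] = substitute(entry["value"], values)
    return values


def apply_bindings(node, values):
    """Substitute bindings in every string value of a JSON structure."""
    if isinstance(node, dict):
        return {key: apply_bindings(value, values) for key, value in node.items()}
    if isinstance(node, list):
        return [apply_bindings(item, values) for item in node]
    if isinstance(node, str):
        return substitute(node, values)
    return node


bind = [
    {"name": "CUSTOMER", "value": "volvo"},
    {"name": "INVOCATION_NAME", "value": "${CUSTOMER} cars"},
]
values = resolve_bindings(bind)
print(apply_bindings({"invocationName": "${INVOCATION_NAME}"}, values))
# {'invocationName': 'volvo cars'}
```

With this ordering rule a binding can only refer to definitions that appear before it, which keeps the resolution free of cycles.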

I did not mention yet that you can also use both dynamic expressions and variables within the type definitions and for synonym lists:

"bind": [

{

"description": "use expression language to make synonym list reusable",

"name": "REUSABLE_CAR_SYNONYM_LIST",

"value": "[old| [|brand] new|second hand| used ] [|electric] [[|motor] car| [|motor] vehicle|auto|automobile|transporter|truck|wreck]"

}

]

And later:

"types": [

{

"name": "CAR",

"values": [

{

"name": {

"value": "car",

"synonyms": [

"${REUSABLE_CAR_SYNONYM_LIST}"

]

}

}

]

}

]

This would result in:

"types": [

{

"name": "CAR",

"values": [

{

"name": {

"value": "car",

"synonyms": [

"brand new auto",

"brand new automobile",

"brand new car",

"brand new electric auto",

"brand new electric automobile",

"brand new electric car",

"brand new electric motor car",

"brand new electric motor vehicle",

"brand new electric transporter",

"brand new electric truck",

"brand new electric vehicle",

"brand new electric wreck",

"brand new motor car",

"brand new motor vehicle",

"brand new transporter",

"brand new truck",

"brand new vehicle",

"brand new wreck",

"new auto",

"new automobile",

"new car",

"new electric auto",

"new electric automobile",

"new electric car",

"new electric motor car",

"new electric motor vehicle",

"new electric transporter",

"new electric truck",

"new electric vehicle",

"new electric wreck",

"new motor car",

"new motor vehicle",

"new transporter",

"new truck",

"new vehicle",

"new wreck",

"old auto",

"old automobile",

"old car",

"old electric auto",

"old electric automobile",

"old electric car",

"old electric motor car",

"old electric motor vehicle",

"old electric transporter",

"old electric truck",

"old electric vehicle",

"old electric wreck",

"old motor car",

"old motor vehicle",

"old transporter",

"old truck",

"old vehicle",

"old wreck",

"second hand auto",

"second hand automobile",

"second hand car",

"second hand electric auto",

"second hand electric automobile",

"second hand electric car",

"second hand electric motor car",

"second hand electric motor vehicle",

"second hand electric transporter",

"second hand electric truck",

"second hand electric vehicle",

"second hand electric wreck",

"second hand motor car",

"second hand motor vehicle",

"second hand transporter",

"second hand truck",

"second hand vehicle",

"second hand wreck",

"used auto",

"used automobile",

"used car",

"used electric auto",

"used electric automobile",

"used electric car",

"used electric motor car",

"used electric motor vehicle",

"used electric transporter",

"used electric truck",

"used electric vehicle",

"used electric wreck",

"used motor car",

"used motor vehicle",

"used transporter",

"used truck",

"used vehicle",

"used wreck"

]

}

}

]

}

]
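The 90 synonyms are simply all combinations of the three top-level bracket groups in the binding: 5 conditions × 2 (with or without „electric“) × 9 vehicle words. The short Python cross-check below writes out the nested „[|brand] new“ and „[|motor] car“ groups by hand, so the three lists are a manual reading of the expression rather than tool output.

```python
from itertools import product

# The three top-level groups of REUSABLE_CAR_SYNONYM_LIST, expanded by hand.
conditions = ["old", "new", "brand new", "second hand", "used"]
drive = ["", "electric"]
vehicles = ["car", "motor car", "vehicle", "motor vehicle", "auto",
            "automobile", "transporter", "truck", "wreck"]

# Join each combination, collapse the gap left by the empty option, then sort.
synonyms = sorted(
    {" ".join(" ".join(parts).split()) for parts in product(conditions, drive, vehicles)}
)

print(len(synonyms))              # 90
print(synonyms[0], synonyms[-1])
```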

What comes next?

Adding imports from file/url

At some point it might be a good idea to keep and maintain parts of the voice model as separate modules which could then be imported from some repository via a URL.

This might look as follows:

"import": [

{

"description": "this is not functional yet but maybe some time we can import bindings for reuse",

"source": "https://example.com/some-binding-definitions-from-a-repository"

}

]

But up to now this is only an idea.

Run conversion as command line tool

For somebody who develops skills mainly from the command line rather than in the Alexa Developer Console (thinking of the Litexa model, for example), it would make sense to make the conversion process available as a command line tool.

Let me know if this is an option for you.

Disclaimer & Caveats

A little disclaimer: as you can see I marked the page as beta. I am pretty sure there are still some bugs around and therefore it is a good idea to make a backup of your JSONs before you start using the service.

I did my best to make this a tool everybody can use but please don’t take it for granted that everything will work as expected.

But when you want to start a discussion you have to start somewhere. I would really like to hear your feedback about the whole approach. Some concepts introduced here may turn out to work great, while I might have overlooked other cases and ideas. Let me know what makes sense for you. What do you miss?

Tell me what you think: frank.boerncke@gmail.com